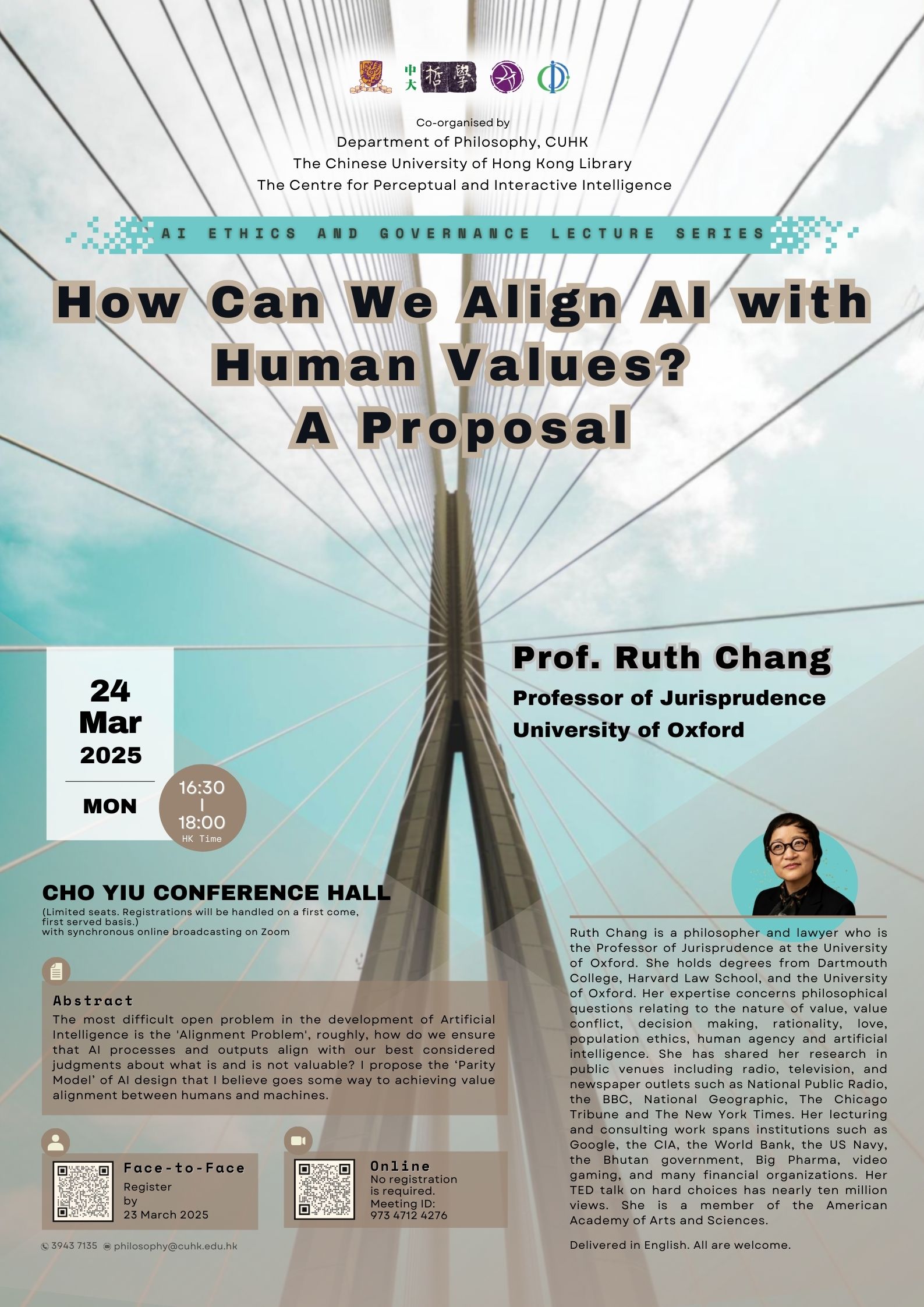

How Can We Align AI with Human Values? A Proposal (AI Ethics and Governance Lecture Series)

Prof. Ruth Chang

4:30pm-6:00pm HK Time

Cho Yiu Conference Hall with synchronous online broadcasting on Zoom

Joining the Seminar face-to-face:

Seats for the face-to-face seminar are limited. Registrations will be handled on a first-come, first-served basis.

Register by 23 March 2025: https://cloud.itsc.cuhk.edu.hk/webform/view.php?id=13705343

Joining the Seminar online:

No registration is required.

Link: https://cuhk.zoom.us/j/97347124276

Meeting ID: 973 4712 4276

Enquiries:

Tel: 3943 7135

Email: philosophy@cuhk.edu.hk

Ruth Chang is a philosopher and lawyer who is the Professor of Jurisprudence at the University of Oxford. She holds degrees from Dartmouth College, Harvard Law School, and the University of Oxford. Her expertise concerns philosophical questions relating to the nature of value, value conflict, decision making, rationality, love, population ethics, human agency and artificial intelligence. She has shared her research in public venues including radio, television, and newspaper outlets such as National Public Radio, the BBC, National Geographic, The Chicago Tribune and The New York Times. Her lecturing and consulting work spans institutions such as Google, the CIA, the World Bank, the US Navy, the Bhutan government, Big Pharma, video gaming, and many financial organizations. Her TED talk on hard choices has nearly ten million views. She is a member of the American Academy of Arts and Sciences.

Abstract:

Arguably the most difficult open problem in the development of Artificial Intelligence is the ‘Alignment Problem’: roughly, how do we ensure that AI processes and outputs align with our best considered judgments about what is and is not valuable? Current strategies for achieving alignment, ranging from regulation to design interventions such as reinforcement learning from human feedback, prompt engineering, data scrubbing and the like, appear promising but are also riddled with spectacular failures. Proponents of such strategies hold out the hope that more data, more learning, and more resource-draining computing power will eventually iron out alignment difficulties.

Common to all these approaches is the strategy of ‘fixing’ AI after it has been created. Instead of imposing ad hoc guardrails after the fact, I suggest a more radical approach to Alignment: a reexamination of the fundamentals of AI design. In particular, I identify two fundamentally mistaken assumptions about human values made in current AI design, which suggest that i) no amount of data scrubbing, prompt engineering, or reinforcement learning from human feedback can achieve alignment given the nature of human values, and ii) an alternative AI design that corrects for these two mistakes could go a long way to achieving value alignment. I propose such an alternative, the ‘Parity Model’, which has two distinctive features. It is a ‘values-based’ approach to AI design, and it recognizes that machine-human value alignment requires the development of what I call ‘small ai’.

Co-organised by the Department of Philosophy, CUHK; The Chinese University of Hong Kong Library; and the Centre for Perceptual and Interactive Intelligence.

Delivered in English.

All are welcome.